I’ve been diving deeper into prompt engineering (or prompt design) lately. Like most things on the internet, there’s no shortage of resources: free courses, general tips, videos, and more. The early focus on simply crafting prompts makes sense, but over time I expect the real value to shift toward responses that are specific and personalized, shaped by user feedback.

Why? Prompt engineering might sound like a lofty skill or role, but in reality it’s surprisingly accessible. It feels destined to become a baseline expectation rather than a specialized craft. Techniques like chain-of-thought prompting or prompt chaining are easy to understand and apply. In its current form, I don’t think prompt engineering has a future.

What’s more compelling to me is the role personalization and feedback will play in shaping large language model (LLM) behavior. Some personalization already exists—for example, LLM memory features that remember details from past chats to save users from repeating themselves. But the real question is: when will LLMs adapt to a user’s unique intelligence profile or preferred reasoning style?

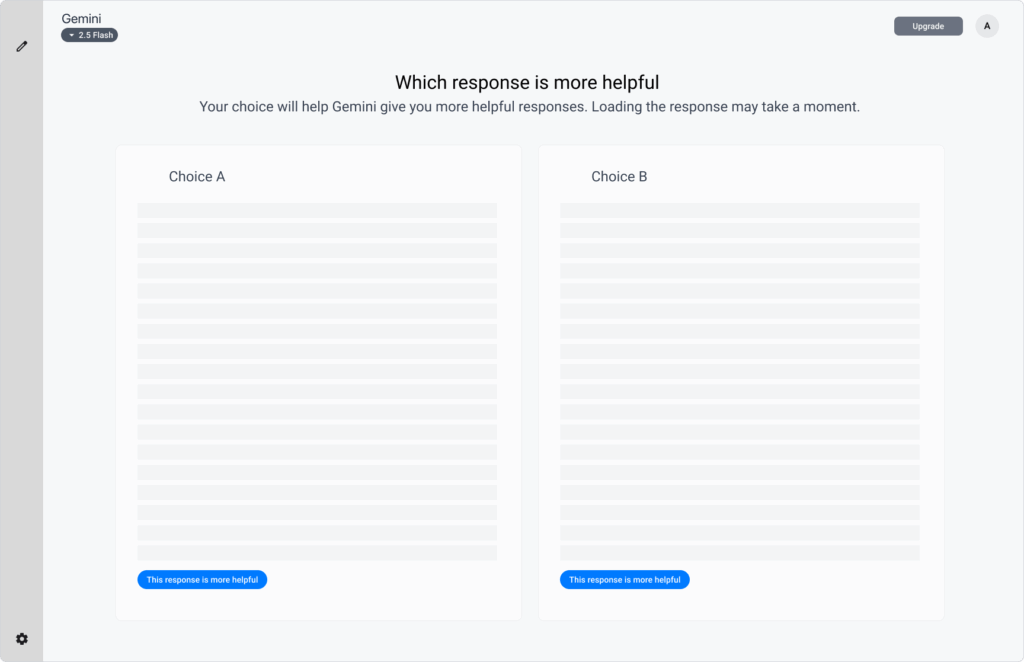

We’re starting to see early user research in this direction. Gemini, for instance, recently asked me, “Which response is more helpful?” and had me choose between two replies that differed mainly in sentiment (positive vs. negative). What’s surprising is that the prompt only appeared on desktop. If the future of LLMs is shaped by the nuances of how we think, not just what we ask, then personalization may be the new frontier of prompt engineering.